Introduction

What is continuous-eval?

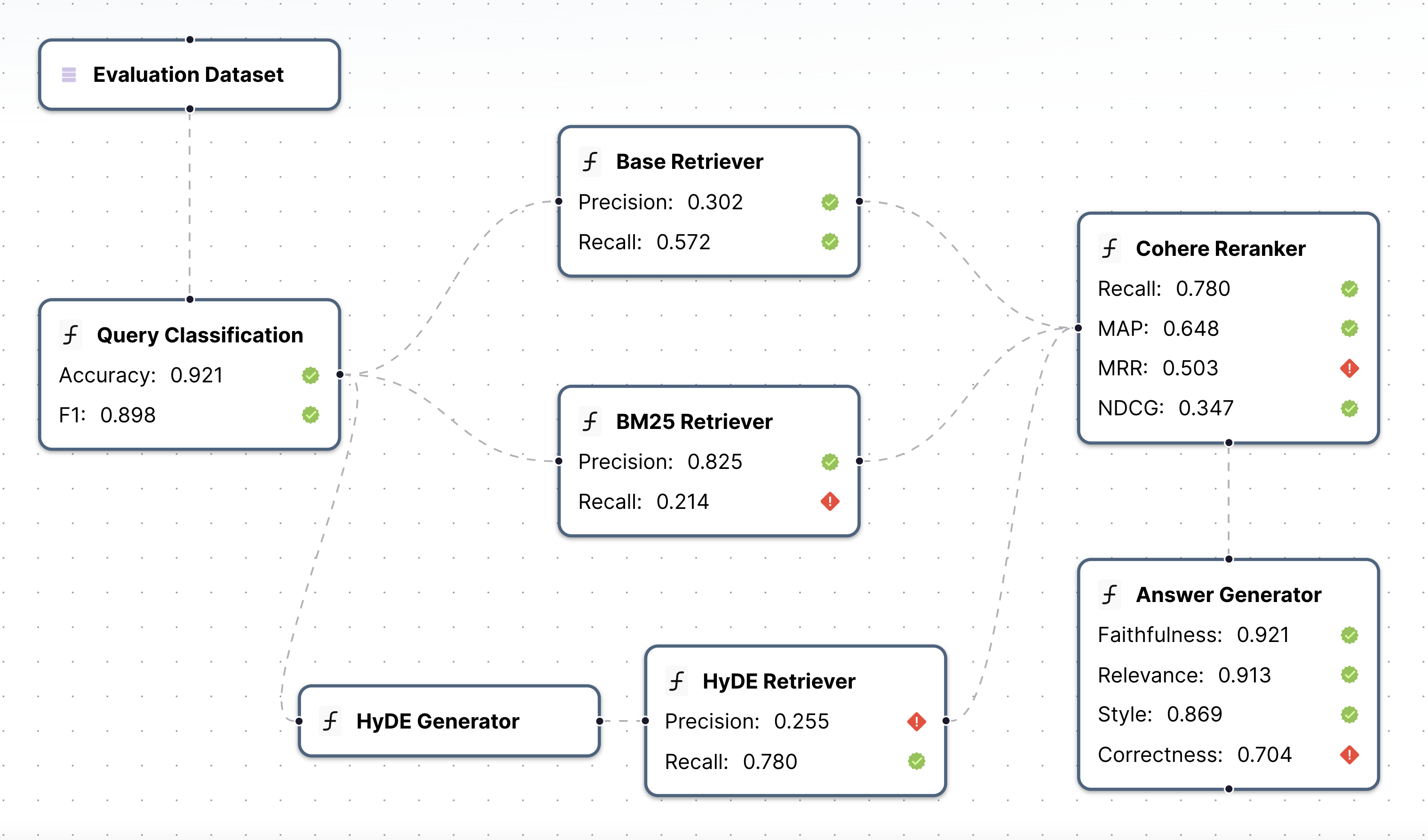

continuous-eval is an open-source package created for granular and holistic evaluation of GenAI application pipelines.

How is continuous-eval different?

- Modularized Evaluation: Measure each module in the pipeline with tailored metrics.

- Comprehensive Metric Library: Covers Retrieval-Augmented Generation (RAG), Code Generation, Agent Tool Use, Classification and a variety of other LLM use cases. Mix and match Deterministic, Semantic and LLM-based metrics.

- Leverage User Feedback in Evaluation: Easily build a close-to-human ensemble evaluation pipeline with mathematical guarantees.

- Synthetic Dataset Generation: Generate large-scale synthetic datasets to test your pipeline.
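To make the idea of modularized evaluation concrete, here is a minimal sketch in plain Python. It does not use the continuous-eval API; the function names, the `MODULE_METRICS` mapping, and the sample data are all hypothetical and exist only to illustrate attaching a different deterministic metric to each module of a two-stage RAG pipeline.

```python
from collections import Counter


def retrieval_precision_recall(retrieved, relevant):
    """Set-based precision and recall over document IDs (deterministic)."""
    retrieved_set, relevant_set = set(retrieved), set(relevant)
    hits = len(retrieved_set & relevant_set)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant_set) if relevant_set else 0.0
    return {"precision": precision, "recall": recall}


def token_f1(answer, reference):
    """Token-overlap F1 between a generated answer and a reference answer."""
    answer_tokens = answer.lower().split()
    reference_tokens = reference.lower().split()
    overlap = sum((Counter(answer_tokens) & Counter(reference_tokens)).values())
    if overlap == 0:
        return {"f1": 0.0}
    precision = overlap / len(answer_tokens)
    recall = overlap / len(reference_tokens)
    return {"f1": 2 * precision * recall / (precision + recall)}


# Hypothetical mapping: each pipeline module gets its own tailored metric.
MODULE_METRICS = {
    "retriever": retrieval_precision_recall,
    "generator": token_f1,
}


def evaluate_pipeline(sample):
    """Score every module's output against that module's ground truth."""
    return {
        module: metric(sample[module]["output"], sample[module]["expected"])
        for module, metric in MODULE_METRICS.items()
    }


# Hypothetical sample: one query traced through both modules.
sample = {
    "retriever": {
        "output": ["doc1", "doc3", "doc7"],
        "expected": ["doc1", "doc2", "doc3"],
    },
    "generator": {
        "output": "Paris is the capital",
        "expected": "The capital of France is Paris",
    },
}

report = evaluate_pipeline(sample)
print(report)
```

Scoring each module separately shows whether a weak final answer comes from poor retrieval or from poor generation, which a single end-to-end score would hide.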

Resources

- Blog Posts:

  - Practical Guide to RAG Pipeline Evaluation: Part 1: Retrieval

  - Practical Guide to RAG Pipeline Evaluation: Part 2: Generation

  - How important is a Golden Dataset for LLM evaluation?

  - How to evaluate complex GenAI Apps: a granular approach

  - How to make the most out of LLM production data: simulated user feedback

  - Generate synthetic data to test LLM applications

- Discord: Join our community of LLM developers on Discord

- Reach out to the founders: Email or schedule a chat